In today’s online environment, users are spoilt for choice when it comes to applications and websites. Companies strive to attract and retain customers, and many do so successfully on one platform or another. The problem arises when users move between a company’s platforms and the experience doesn’t carry over. For instance, an online service’s app may be stellar while its website lags behind, and the gap between the two can be damaging. This is where progressive web apps (PWAs) come into play.

Simply put, progressive web apps are websites that have been designed to look and behave like an application. There are many benefits to such a technology, with data backing the claim that users prefer mobile apps to websites. This makes sense, considering that applications are generally easier to navigate, offer better personalisation, and can work offline. These are just some of the features that a PWA leverages to provide users with an enhanced experience.

For a deeper dive into why progressive web apps are the future of web design and development, take a look at these pointers.

Heightened security

For any type of web service, security is among the top priorities. Not only does it protect the user base from malicious attacks, but it also limits the lasting damage that these attacks can cause. What’s more, search engines like Google encourage web services to have the right security provisions in place. Failing to do so has its drawbacks, and users are often warned about any shortcomings in this regard.

Progressive web apps address much of this by design: service workers, the scripts that power a PWA, only run on pages served over HTTPS. This means a PWA enjoys the same transport security as any other HTTPS website, with data encrypted between the browser and the server. The benefit of this heightened security is that it allows for a wider range of applications. Users can enter sensitive personal or financial information into a progressive web app with far less worry about it being intercepted in transit.
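
As a rough illustration of the HTTPS requirement, the sketch below approximates the check a browser applies before it allows a service worker to be registered. The function name `canUseServiceWorker` and the example URLs are hypothetical, and the rule is deliberately simplified; in a real page the browser exposes its own answer as `window.isSecureContext`.

```typescript
// Simplified approximation of the browser's "secure context" rule.
// Assumption: only https:// pages and common local development hosts qualify.
function canUseServiceWorker(pageUrl: string): boolean {
  const url = new URL(pageUrl);
  const isLocalDev = url.hostname === "localhost" || url.hostname === "127.0.0.1";
  return url.protocol === "https:" || isLocalDev;
}

console.log(canUseServiceWorker("https://shop.example.com/cart")); // true
console.log(canUseServiceWorker("http://shop.example.com/cart"));  // false
console.log(canUseServiceWorker("http://localhost:3000/"));        // true
```

The local development exception is why a PWA can be tested on a developer’s machine without a certificate, while the deployed site still needs HTTPS.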

Offline functionality

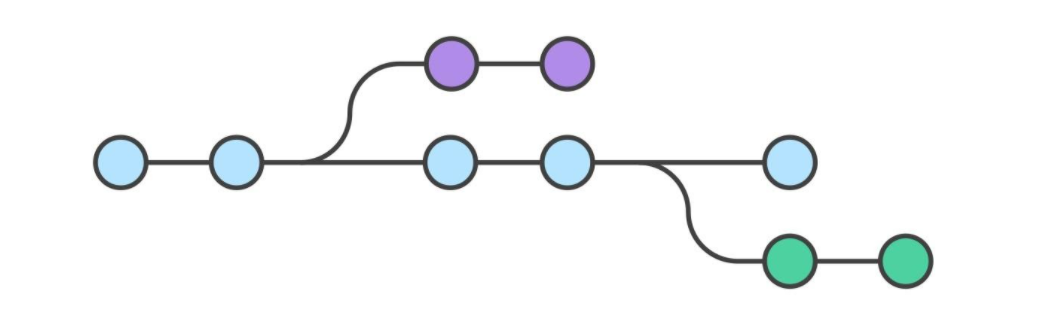

Among the key reasons why progressive web applications are regarded as the future of web applications is that they bridge the gap between online and offline access. Thanks to a script known as a service worker, which runs in the background and acts as a network proxy between the app and the server, developers are able to offer users an offline experience. In some cases this is only a partial, semi-offline experience, but the key takeaway is that the app keeps working without a connection.

Unlike a traditional web application or website, a PWA doesn’t rely completely on an active network connection to work. It leverages content caching to function offline. On the development side, this is all possible thanks to the JavaScript service workers mentioned above. These can intercept network requests and respond to connectivity changes, while running independently of the application’s pages.
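
The caching behaviour described above is often implemented as a "cache-first" strategy. The following is a minimal sketch of that idea, not a production service worker: `cacheFirst`, `Fetcher` and the in-memory `Map` are stand-ins chosen for illustration, and everything is kept synchronous for readability. A real service worker would use the asynchronous Cache API and `fetch()` inside a `fetch` event handler.

```typescript
// Stand-in for the network. Assumption: it throws when the device is offline.
type Fetcher = (url: string) => string;

// Cache-first: serve a stored copy if one exists, otherwise try the network
// and remember the result. If both fail, return a fallback page.
function cacheFirst(
  url: string,
  cache: Map<string, string>,
  fetcher: Fetcher,
  offlineFallback: string
): string {
  const cached = cache.get(url);
  if (cached !== undefined) return cached; // served without touching the network
  try {
    const fresh = fetcher(url);
    cache.set(url, fresh); // store for the next visit
    return fresh;
  } catch {
    return offlineFallback; // nothing cached and no network
  }
}

// Hypothetical usage with a fake network that can be "switched off".
const pageCache = new Map<string, string>();
const online: Fetcher = (url) => `content of ${url}`;
const offline: Fetcher = () => { throw new Error("network unavailable"); };

console.log(cacheFirst("/home", pageCache, online, "offline page"));   // fetched, then cached
console.log(cacheFirst("/home", pageCache, offline, "offline page"));  // served from the cache
console.log(cacheFirst("/about", pageCache, offline, "offline page")); // falls back
```

The second call succeeds without a network because the first call stored the page, which is the behaviour that lets a PWA keep working offline.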

Better user experience and conversions

Providing a simple, easy, and hassle-free user experience is the end goal for any application. Progressive web applications particularly excel in this regard because of service workers. These event-driven scripts allow granular caching, which can speed up the application considerably. In fact, with proper optimisation, a developer can ensure that the PWA loads almost instantly on repeat visits and is easy to navigate, even without a network connection.
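
One way to picture "granular" caching is as a set of per-request rules that decide how each resource is served. The sketch below is illustrative only: the function name `strategyFor`, the file extensions and the `/api/` paths are all assumptions, not taken from any particular application.

```typescript
type Strategy = "cache-first" | "network-first" | "network-only";

// Hypothetical routing rules mapping a request path to a caching strategy.
function strategyFor(pathname: string): Strategy {
  // Static assets rarely change, so a cached copy is served immediately.
  if (/\.(css|js|png|jpg|svg|woff2)$/.test(pathname)) return "cache-first";
  // Payment data must never be stale, so it always goes to the network.
  if (pathname.startsWith("/api/checkout")) return "network-only";
  // Everything else prefers fresh data, with the cache as a backup.
  return "network-first";
}

console.log(strategyFor("/static/app.js"));    // "cache-first"
console.log(strategyFor("/api/checkout/pay")); // "network-only"
console.log(strategyFor("/api/products"));     // "network-first"
```

Serving static assets straight from the cache is what makes repeat visits feel instant, while the network-first rule keeps frequently changing data up to date.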

These benefits translate into conversions for companies that leverage the power of a PWA. A great example is AliExpress, which increased its conversion rate for new users by 104%. Besides this, there was a 74% increase in the time users spent per session on its PWA. These numbers showcase the power of a PWA, but AliExpress isn’t the only success story. PWAs have also delivered on another notable metric: user engagement. Saudi retailer eXtra Electronics was able to generate 100% more sales from users reached through its PWA’s web push notifications.

Cost-effective development cycles

There are numerous testimonies to back the claim that PWAs are cost-effective. Nicolas Gallagher, the Engineering Lead for Twitter Lite, claims that the Twitter Lite PWA is the least expensive way to use Twitter. Further, developing a PWA benefits the parent company because it is much cheaper to build than a native application.

To offer perspective, a PWA typically costs between $3,000 and $9,000 to develop, whereas a native application costs around $25,000. Aside from the cost, a progressive web application takes less time to develop. This is particularly valuable to startups as it promises a much quicker ROI.

Universality

Progressive web apps are solutions that combine the features of a website and an app. Among the key benefits is that these apps can run on any device or operating system because they run in the browser. This helps provide a seamless user experience across devices, as developers only need to optimise for the browser rather than for each platform. Unlike a traditional app, a PWA reduces the need for heavy-duty downloads and saves on device storage.

A good example is Pinterest’s PWA, which is only around 150KB in size, compared to the native app, which is around 56MB for iOS and over 9MB for Android. Besides saving on file size, PWAs are also universal because there’s no need to install them from an app store. Think of it as a plug-and-play solution that businesses can fall back on across all devices with a browser.

Personalised interactions

PWA developers have the freedom to personalise user experiences in a more flexible manner than is possible with a traditional app. This improves customer loyalty and engagement a great deal, and one of the best examples of this is push notifications.
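
To make the idea of a personalised push notification more concrete, here is a small sketch of how the notification text might be tailored to a user. Everything in it is hypothetical: the `UserProfile` fields, the `buildNotification` function and the wording of the messages are assumptions made for illustration.

```typescript
// Hypothetical shape of what the service knows about a user.
interface UserProfile {
  firstName: string;
  lastViewedProduct?: string;
}

interface NotificationContent {
  title: string;
  body: string;
}

// Pick a message based on the user's recent activity.
function buildNotification(user: UserProfile): NotificationContent {
  if (user.lastViewedProduct) {
    return {
      title: `Still interested, ${user.firstName}?`,
      body: `${user.lastViewedProduct} is waiting in your recently viewed items.`,
    };
  }
  return {
    title: `Welcome back, ${user.firstName}`,
    body: "See what is new this week.",
  };
}

console.log(buildNotification({ firstName: "Asha", lastViewedProduct: "Espresso machine" }));
console.log(buildNotification({ firstName: "Ravi" }));
```

In a real PWA, content like this would typically be displayed from the service worker’s `push` event handler by calling `self.registration.showNotification(title, { body })`, once the user has granted notification permission.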

To web developers across the world, progressive web apps check all the boxes. They are fast, cross-platform compatible, reliable, and tightly integrated. What’s more, companies are slowly catching on and making PWA development a priority for their online services. This means that there’s a growing demand for developers and the scope for innovation is massive. With the required skill set, you could very easily find yourself developing the next big thing!

To better position yourself to achieve this type of success and work amongst the best in the industry, sign up for the Talent500 platform. Our algorithms intelligently align your profile with leading Fortune 500 companies, across the world, thus enabling you to be on the frontline of innovation.